Job Hunt as a PhD in AI / ML / RL: How it Actually Happens

Combine a supposedly good tech hiring market with a Ph.D. from Berkeley AI Research and let's see what we get. In the spirit of transparency, I wanted to share my experience on the job search and some notes specific to reinforcement learning as an area. Is there something you want to know about that I didn't share? Please let me know. (Note: I won't directly share the compensation of the offers I received; for that kind of data, please see aipaygrad.es.)

My goals for the job search were:

- to get a Research Scientist position at a top lab in industry. This was the core of my 1-5 year career plan, so it was a pretty clear goal for me. The path to getting offers for these roles has never been clear to me, but I felt like my internships meant that I was close. My understanding is that at some organizations it is nearly entirely about who you know, while other institutions are the opposite. A second consideration is how important having a postdoc is. I don't have one, but I made it.

- to learn about applied roles in the area of my Ph.D. (reinforcement learning & robotics). In the future I want to build systems, so cultivating relationships with applied labs makes sense.

This post is broken into a few major sections (that may feel somewhat disjointed), and if you're reading on a computer you should be able to see the TOC to the right.

- The raw data of my job search: how many applications, how many calls, how many offers, timeline.

- Job process reflections and advice: I share my biggest takeaways with the process, how I may prepare in the future, and what the other side is like (for current students reading this).

- RL reflections: some specific writing on how hiring for reinforcement learning jobs happens. TL;DR is that people don't really know how to evaluate us yet, but they're really trying.

The data

The last 3 years or so have been a journey of me trying to "make it" in AI and get an offer for one of these positions. My path into AI happened entirely during my Ph.D., through the many transitions from systems engineer to roboticist to machine learner (for those wanting to repeat that, read my tips here -- though it is very hard and requires great luck). With a research scientist position, I could do good work, continue to build the foundation of my career, and hopefully feel like I truly belong.

I considered myself a great, but not perfect, candidate. In general, I had the big picture going for me: a top school, a hot topic area in RL, industry internships, and a decent publication record. What I lacked was a famous advisor in AI or any really famous papers. I get it: I am in a very privileged position to even list those as weaknesses.

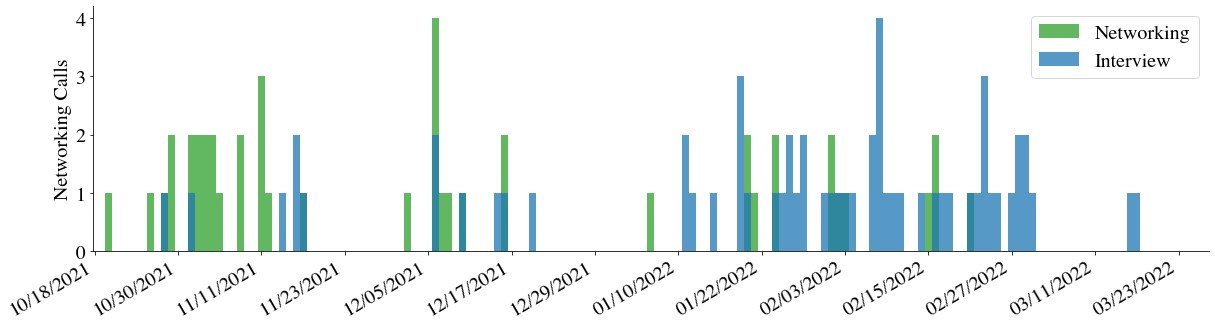

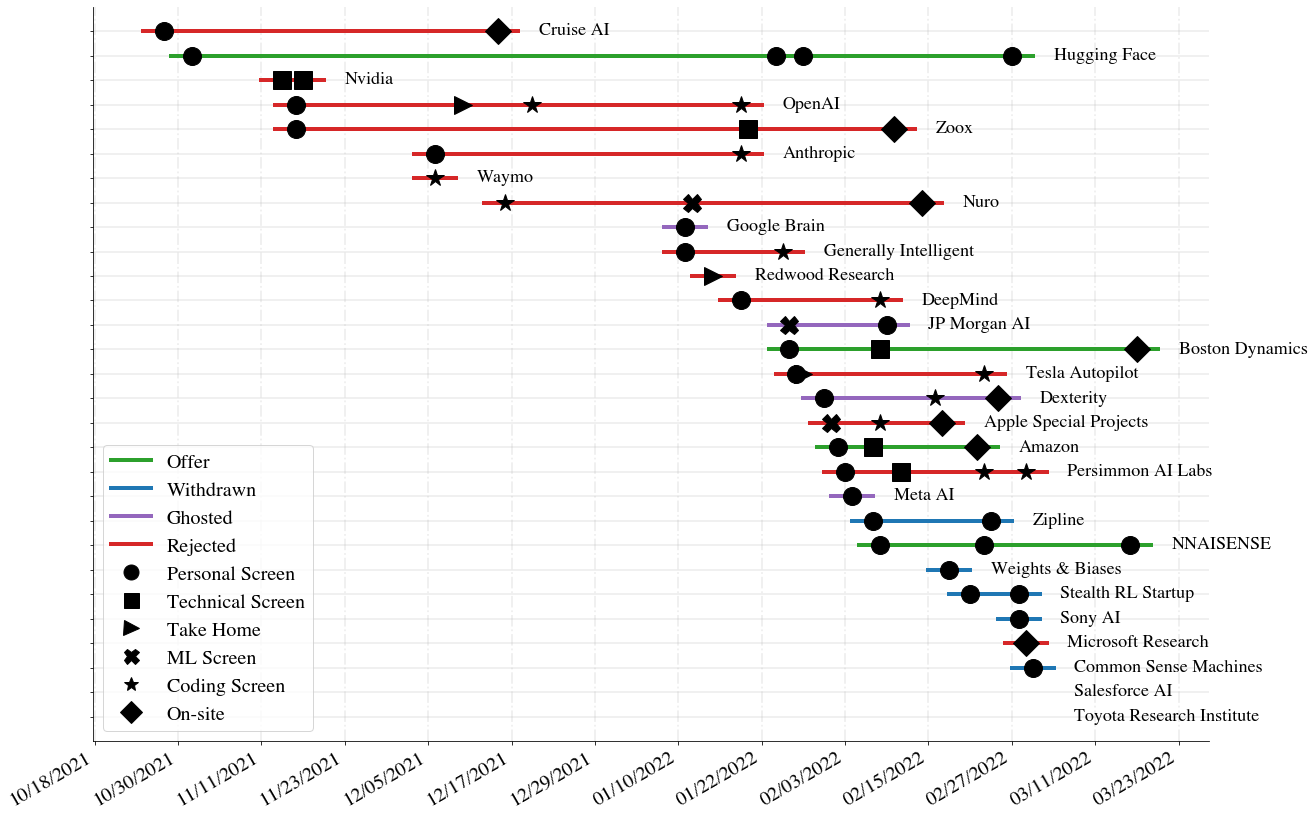

The result was roughly 53 one-off interviews, 46 networking calls, 6 (virtual) on-site interviews, and 4 offers from the 30 companies I contacted or applied to. Here is a visualization of the timeline. I announced I was starting the process on 26 Oct. 2021, which kicked off an influx of emails and messages. These conversations were a fantastic way to learn what is out there without the constant pressure of interviews. Some of them turned into early interviews, but really I had a networking phase followed by an interview phase. (I was trying to interview earlier, but most companies seemed to be waiting until 2022.)

I ended up with 4 somewhat different offers (listed in the order received). All of them seemed like very good technical matches, so it came down to who I would be working with and where my life would be:

- HuggingFace -- Research Scientist: an offer to join as the only RL researcher or one of a few. I was told I would need to take on the role of educating my colleagues and collaborating on non-RL projects. This was exciting for me, as I would also get to try my hand at what I consider a more stable area of ML research: NLP.

- Amazon Robotics & AI -- Applied Scientist II: an interesting role where I would be doing something along the lines of RL / offline RL / continual learning on Amazon's thousands of deployed fulfillment center robots. I would have learned a lot, but the structures were not clearly in place for me to continue being a leader in the academic community.

- Boston Dynamics -- Research Scientist, Reinforcement Learning: this offer was very exciting. I came close to taking it, but the compensation was weirdly structured. The role would've been leading a new RL team to help translate cutting edge RL research to new applications on the Atlas and Spot robots.

- NNAISENSE (pronounced "nascence") -- Research Scientist: a company with an impressive amount of expertise in model-based RL, but not a lot of transparency on how the business is doing or growing. At the end of the day I wasn't ready to move full-time to Switzerland, but I really enjoyed all of these conversations and would consider joining in the future.

Job process reflections

In general, the job search process was very empowering for me. If you approach it with an open mind it really can be a high-leverage use of time for the next stage of your career.

- High yield: If you're at a good school, the response rate is astounding. I think every company I reached out to (including any online job posting I was remotely qualified for) got back to me with at least an email. I did not need to take the firehose approach in the slightest. Hopefully, this lets candidates spend more time reflecting and preparing for interviews rather than dealing with logistical nightmares.

- Waiting game: rejections always come first, and it takes a lot longer to get to the acceptance phase. There are clear patterns where you are not a company's top choice, so you don't hear back for a few weeks. This is okay and expected. Alternatively, some companies impose total headcount freezes mid-cycle. It is good to chat with other people in your area who are applying, so you know for sure it is not just you.

- Networking value: AI research is a really small world. The quality of people you get to talk to in this process is amazing. It's a fun process, and I think I extracted a lot more long-term value from it than just my next job.

- Research scientist titles: this title carries a lot of prestige for some reason. At larger orgs, the on-paper distinction between engineers and scientists changes promotion paths, compensation, etc., but on moderately sized projects the work done is very similar. I like the approach at companies like OpenAI, which use one title, "Member of Technical Staff," across multiple recruitment pipelines.

- Should I do a postdoc?: there is definitely a right time for a postdoc. I don't regret skipping one, but I do wish I had been in a more stable mental place to consider it. I think the default answer to "should I do a postdoc?" should be no for people with industrial inclinations, but... the right postdoc can open so many doors. The right postdoc is a safe space to show the world how amazing you are and to flesh out your vision, with no pressure to leave and many opportunities to grow. I think these are rare, but they sound amazing.

Interview preparation

I bet this is the section that will get the most interest, but honestly I still don't have any world-shattering advice. I can list some of the types of questions I saw a lot, but specific action items for prep are hard to give.

Interview styles per role

Here's the breakdown of titles I interviewed for: 12 Research Scientist, 2 Researcher, 2 ML Engineer, 1 Deep Learning Engineer, 1 RL Engineer, 1 Control Engineer, and 7 unknown (more Research Scientist roles than I expected, though some of those teams weren't really writing papers). Here are the buckets I noticed:

- The paper-writing research scientist interviews: This feels like how the faculty search was described to me. These are all about evaluating your vision, your place in the broader research community, and ability to work with the team.

- The product-focused research scientist interviews: A mix of faculty-search-style self-promotion and practical engineering questions. It feels like they created this title to make the Ph.D. student feel special, but in reality you will do the same work as the other engineers.

- The machine learning engineer interviews: For roles without the research angle, I was pushed more on practical problems. There were more machine learning fundamentals interviews, on material I have no idea how I still remembered (things like logistic regression, probability fundamentals, vector calculus, and math puzzles). Sometimes (e.g. Apple) I had 3-5 coding interviews. It was so many that I knew I didn't want to do that job.

- The robotics engineer interviews: these took me back to when I was an electrical engineer, and I got fun systems questions. These interviews were also extremely specific to robotics and spent less time on machine learning or reinforcement learning in general.
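The fundamentals rounds described above can be hard to picture. As an illustrative sketch only (a hypothetical exercise, not a question reproduced from these interviews), here is logistic regression on 1-D data fit from scratch with batch gradient descent, using only Python's standard library. The toy dataset, learning rate, and step count are all assumptions chosen for the example.

```python
import math


def sigmoid(z: float) -> float:
    # Logistic function, branching on sign to avoid overflow in math.exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def mean_log_loss(xs, ys, w, b):
    # Mean binary cross-entropy of p(y=1|x) = sigmoid(w*x + b).
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(xs)


def fit_logistic_regression(xs, ys, lr=0.5, steps=2000):
    # Batch gradient descent on the mean cross-entropy.
    # d(loss)/d(logit) = prediction - label, so the gradients are simple sums.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


if __name__ == "__main__":
    # Hypothetical toy data: mostly y=1 for positive x, with two overlapping
    # points so the classes are not separable and the weights stay finite.
    xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
    ys = [0, 0, 1, 0, 1, 1]
    w, b = fit_logistic_regression(xs, ys)
    print(f"w={w:.3f} b={b:.3f} loss={mean_log_loss(xs, ys, w, b):.3f}")
```

The fact that keeps the gradient short is that, for a sigmoid output with cross-entropy loss, the derivative with respect to the logit is just the prediction minus the label.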

The most common questions

- What do you want to work on when you show up? Who do you want to work with? If you had unlimited compute what would you work on?

- What was a past project you worked on? What was the impact? What did you learn?

- What styles of communication do you prefer? Do you work in teams? Do you value mentorship?

Actionable tips

- If you have the bandwidth, more coding practice will definitely help. I signed up for LeetCode premium for a few months, and this was the best preparation I did. Reading The Book is so dry that it's hard to make substantial progress. I did not do that much and made it.

- An increasing number of companies are doing "ML Background" interviews that are mostly math tricks and basic ML tradeoffs. Preparing for this is hard, but studying some coursework would help. I didn't.

- Spend a lot of time on your job talk. It's not about the technical content, but about conveying a vision and a story. Honestly, the more feedback I got, the fewer plots I felt I should have in my talk. Keynote's Magic Move is the best transition.

- Write out a research agenda with timelines and goals. It helps you understand what starting in a new position will look like.

- You can say no to companies. If they're doing something ridiculous, like not communicating the schedule or saying they only have one timeslot for an interview, ask them to make space for you. The worst case is they can't accommodate you, but the vast majority of the time they want it to be a positive experience for you.

- Ask what to prepare for in interviews. They will often say "leetcode," but sometimes the recruiter will actually give you a topic area like threading or object-oriented programming.

Some benefits of having a job!

It's really amazing having a job. Finishing my Ph.D. has given me a little clarity on what awaits my colleagues who are finishing soon and who have been told that it's easier to be happy on the other side.

- Exit paths: You can easily change jobs; you can't easily move your Ph.D. A Ph.D. is likely the most high-stakes job of your life, because if it turns sour you sacrifice a lot of time to move on. It's wild, because most people decide who to work with right after undergrad. My bet would be that people who work for a few years before graduate school have a somewhat better playbook for choosing the right lab. Exit paths also remove the advisor-student power dynamics that plague graduate school: your manager now needs to invest in you so that they can keep you around doing your awesome work.

- A team: The set of colleagues you have access to is amazing. I joined a research team where I am on the younger side of those with a Ph.D. The breadth of experience I can easily draw on and engage with makes it a lot easier for me to visualize my next few years and what success looks like. When interviewing, this is hard to see, but once you start it should be clear that there are systems in place to help you succeed.

- Balance: Work life balance is really strong. Most companies actually respect your life and time.

Reinforcement learning reflections

"We solved perception, but our bots keep crashing."

There is growing optimism about (or, more realistically and surprisingly, a need for) reinforcement learning expertise. The most expansive robotic platform in the real world today is autonomous vehicles. In these labs, very good perception APIs have been handed off to control teams, and those control engineers have been struggling to extend existing stacks to cover the long tail of problems one encounters when driving in SF. RL practitioners step in with the mindsets and toolsets for creating machine-learning-based decision-making systems.

In many cases, I think the technology would involve integrating targeted model learning into areas where control performance is confusing and robustness is highly valued. With this in mind, most companies happily entertained far-out ideas such as "AlphaZero for AVs" or "RL as an adversary for reliability testing." One of my evaluation metrics for a company was how they answered the question of how they intend to handle new and continuous data from partners / deployed robots -- my idea of how all real-world data becomes ML.

We'll see in the next few years how these ideas play out, but big players are moving fast in the space. Tesla is growing its RL team, and I do think they generally try to build things that "work" (though they often leave a lot to be desired in evaluating the impact and being transparent about the capabilities). DeepMind seemingly continues to hire everyone I've looked up to who comes onto the job market in RL, so their big projects won't slow down at all.

The billion-plus-dollar question is how much data and training scale is needed for something like MuZero to work. We've seen that at maximum scale it is amazing, and EfficientZero started to peel that back. So many companies want to try MuZero that I think we'll know within a couple of years.

Evaluating RL candidates

I think I've finally run into the chicken-and-egg problem: a) a company wants to hire experts in a niche new field, but b) has no one in that field yet, so how does it evaluate candidates?

A few of the things I encountered...

- I had a lot of RL interviews, and some of them were suspect. Questions like "write down the equation for Q-learning" or "implement gradient descent" are normal. Cruise gave me a good reward shaping question (thanks, Alex).

- Tesla also asked a pretty good RL question. It was a big code skeleton to be completed, so there was less pressure to remember all the details. I think this format, where you need to implement a couple of lines, understand existing code, and eliminate a bug, maps a lot better to real engineering practice.
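For reference, the equation behind a "write down the equation for Q-learning" question is the standard tabular update Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)). The Python sketch below applies it with an epsilon-greedy behavior policy. The five-state chain environment, its reward, and the hyperparameters are hypothetical choices made purely for illustration, not anything from these interviews.

```python
import random

N_STATES = 5      # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    # Hypothetical chain environment: reward 1.0 only on reaching the last state.
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy behavior policy; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # TD target bootstraps from the greedy value of s' (none if terminal).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    q = q_learning()
    for s in range(N_STATES - 1):
        print(s, [round(v, 3) for v in q[s]])
```

Because the target uses the max over actions in s' rather than the action actually taken next, this update is off-policy: the table moves toward the values of the greedy policy (always move right) even though exploration occasionally moves left.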

Prestige, signal, and noise

A large undercurrent of this post is how much I was helped by having a @berkeley.edu email address. My job search would've been very different without it. I think every candidate has an optimum on the spectrum between how many emails they send and how likely a response is. If you don't expect a response, you should probably spend more time building up each one (by doing more background research, building, or networking).

AI is certainly a community driven by prestige. It may not have been a decade ago, but now, with its financial upside, people are drawn to it for non-scientific reasons. Surely this has affected me, but I am happy to say that my job decision was not about the money.

Finally, on noise. This post is my specific experience. Even if you are a very similar candidate with similar career goals, this post is already 6+ months out from when I was searching. No process will be repeatable, but the themes will continue to unfold.